Scrape with BeautifulSoup

Your Best Friend, and Worst Enemy

So let’s get started - this arc will cover the grocery store scraping project from start to finish! This will be our first project, and by the end of this section you will be able to scrape data from the web, store it in a Google Sheets “database”, and grab that information from the “database” and put it on a webpage!

You might consider setting up a GitHub to store your projects for the future, which you can read about by going to www.google.com!

Make a new folder and name it whatever you like (I’m going to name mine ScrapeWell_Groc_1), then create your first file in that folder for this script (I will name mine ScrapeWell_Les_1.py).

Also, I will bold everything that “changes” from code block to code block. Note, as this is a new Substack, click the button below if it is not already filled in to get registered as “Signed Up” (I’m sorry about the confusion, this is my first time making a newsletter :o)

Also consider joining the Discord community!

To start we want to install the following libraries:

pip install requests

Why? This package “connects” us to the internet and allows us to make “requests” (hahahaha -_- I know) to get data from the internets (like I said, humor).

and

pip install bs4

Why? This package is like the kid who demystified math in school for you: it takes the gibberish HTML (seriously, it’s bad) and puts it into a format you can work with.

Linked is the documentation for requests and bs4. I’d like to say I’ve read through both but let’s be real, I haven't - I only go when I have an error… often.

OK so we have the packages, let’s hope you’re not lost, if you are well, www.google.com?

Next: actually grab the webpage from the internet, how do we do this?

import requests

import bs4

from bs4 import BeautifulSoup

page_url = "https://www.wholefoodsmarket.com/sales-flyer?store-id=10005" #this is the website we want to scrape

page_sourced = requests.get(page_url) #we "search" for the website

print(page_sourced)

'''Output should be: <Response [200]> - this means there was a valid response'''

Nice, so pause - it is always good to check the Response first, as you can sometimes find key information here, like Response 429, which means you are sending requests too fast, or 403, which means you are not allowed to access the content (oh yeah, there are ways around this).
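If you want the status code to explain itself, here is a minimal sketch using Python's built-in http module. The helper name explain_status and the hint wording are my own, made up for illustration, and are not part of requests. With a real response you would pass in its status_code attribute.

```python
from http import HTTPStatus


def explain_status(code):
    """Return a short, human-readable note for an HTTP status code."""
    try:
        # HTTPStatus knows the standard phrase for each registered code.
        phrase = HTTPStatus(code).phrase
    except ValueError:
        phrase = "Unknown status"

    # Hypothetical hints for the codes this post talks about.
    if code == 200:
        hint = "the page came back fine"
    elif code == 429:
        hint = "slow down, you are sending requests too fast"
    elif code == 403:
        hint = "the server is refusing to show you this content"
    else:
        hint = "look this one up before going further"
    return f"{code} {phrase}: {hint}"


for status in (200, 403, 429):
    print(explain_status(status))
```

Checking the code before touching the content saves you from trying to parse an error page as if it were the sales flyer.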

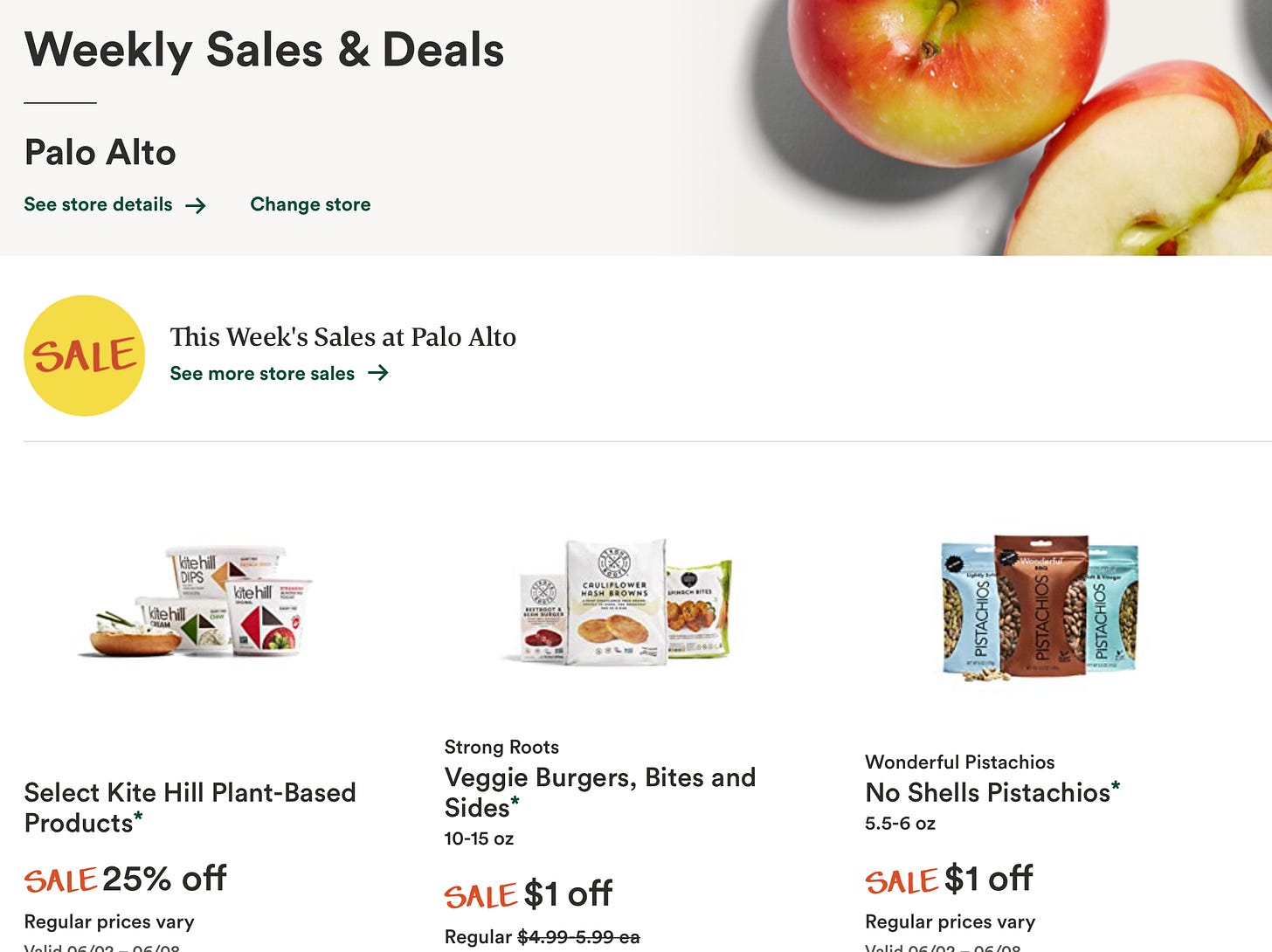

So this is the page we are scraping:

Next: wait, I’m confused, I want to see the content of the webpage, not just the response code? Of course, that’s a quick addition, but remember - the response code is your friend, it gives information that is helpful.

import requests

import bs4

from bs4 import BeautifulSoup

page_url = "https://www.wholefoodsmarket.com/sales-flyer?store-id=10005"

page_sourced = requests.get(page_url).content #add .content here

print(page_sourced)

'''Output should be: b'\n\n\n\n\n\n\n<!doctype html>\n<html lang="en">\n <head>\n \n \n \n \n \n \n \n \n \n\n <title>Weekly Deals and Sales | Whole Foods Market – Palo Alto</title> etc...'''

So, yeah, now you see why we use BeautifulSoup - try reading the above without smashing your head on the table -_-. We got the webpage though!
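In case you are wondering about that b at the front of the output: .content hands you raw bytes, not a normal Python string. Here is a small sketch of the difference using a made-up one-line snippet instead of the real page (the variable names are mine). The requests library also offers a .text attribute on the response that gives you the decoded string directly.

```python
# A one-line stand-in for the downloaded page, encoded the way it travels over the wire.
raw_bytes = "<title>Weekly Deals and Sales | Whole Foods Market – Palo Alto</title>".encode("utf-8")

print(type(raw_bytes))  # bytes, which is why the printout starts with b'...'
print(raw_bytes)        # the dash shows up as escaped byte values

# Decoding turns the bytes back into readable text.
decoded = raw_bytes.decode("utf-8")
print(type(decoded))
print(decoded)
```

BeautifulSoup accepts either form, so the rest of the post keeps using .content.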

Finding the Elements

Pause, this is where I might have a slightly different setup: I use Safari, but this works in Chrome too. You want to find the “inspector”, not this one, rather the one you get if you right click on the webpage and select “Show Page Source” (in Chrome this is called “Inspect”). You should get a pop up that looks like:

Whoa, lots of information, but OK, let’s move forward and not get stuck. We will cover how to make use of a lot of the information here over the course of the newsletter, but let’s start with the basics!

In Safari, click the “target” icon next to the attention sign seen above.

In Chrome, click the button in the top left of the information pane that looks like:

OK great, so now click on the element from the page that we want to “save” data from - in this case, an item on sale! (You will see a target box on the page that shows you what you are “selecting”; try to find the smallest box around the thing you’re searching for.)

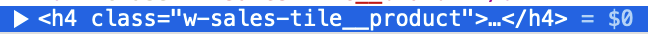

Now CLICK! and your inspector will highlight:

in your inspector frame. So now click the down arrow and you’ll see:

Oh my gosh, look! It’s the text! The Sacred Texts! Since companies pay developers a lot and their websites are not static, we can pretty much assume that all sale titles will be coded the same way. So now let’s do one last thing before we go back to the code: right click the blue highlighted line and you will see options; select “Copy”, then “Attribute”.

Now paste that attribute into your code on a new line to save it, and also write down the “name” of the tag (in this case 'h4') so your code looks like:

import requests

import bs4

from bs4 import BeautifulSoup

page_url = "https://www.wholefoodsmarket.com/sales-flyer?store-id=10005"

page_sourced = requests.get(page_url).content

print(page_sourced)

# Sale Item, h4, class="w-sales-tile__product"

As a best practice I like to write what it is that I am “grabbing” next to it so I can keep track in larger projects.

Now we are ready to use bs4!

The moment we have all been waiting for, BeautifulSoup!

So remember when I said BeautifulSoup helps “read” the HTML? Let’s take a look.

import requests

import bs4

from bs4 import BeautifulSoup

page_url = "https://www.wholefoodsmarket.com/sales-flyer?store-id=10005"

page_sourced = requests.get(page_url).content

html_content = BeautifulSoup(page_sourced, "html.parser")

print(html_content)

# Sale Item, h4, class="w-sales-tile__product"

'''Output:

<!DOCTYPE html>

<html lang="en">

<head>

<title>Weekly Deals and Sales | Whole Foods Market – Palo Alto</title> etc... '''

OK, so still not awesome, but it looks much, much better than before, and we can get a sense of what this page contains.
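If you want to poke at the parsed page without hitting the website every time, here is a small sketch that runs BeautifulSoup on a tiny page I made up (the markup and variable names are hypothetical stand-ins for the real download). It shows that once the HTML is parsed you can reach into it by tag name, and that prettify() prints an indented version.

```python
from bs4 import BeautifulSoup

# A tiny, made-up page standing in for the real download (hypothetical markup).
raw_html = (
    b"<!doctype html><html lang='en'><head><title>Weekly Deals</title></head>"
    b"<body><h4 class='w-sales-tile__product'>Veggie Burgers</h4></body></html>"
)

soup = BeautifulSoup(raw_html, "html.parser")

# Tags can be reached by name; this grabs the first match of each.
page_title = soup.title.get_text()
first_heading = soup.h4.get_text()

print(page_title)
print(first_heading)

# prettify() returns the parsed page as an indented string, easier on the eyes.
print(soup.prettify())
```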

But now what? We use the information from the inspected element in a Command + F type situation. No, seriously, this is awesome!

import requests

import bs4

from bs4 import BeautifulSoup

page_url = "https://www.wholefoodsmarket.com/sales-flyer?store-id=10005"

page_sourced = requests.get(page_url).content

html_content = BeautifulSoup(page_sourced, "html.parser")

sale_items = html_content.findAll('h4', class_="w-sales-tile__product")

print(sale_items)

'''Output: [<h4 class="w-sales-tile__product">Select Kite Hill Plant-Based Products<a class="w-link" href="#legalese"><sup aria-label="Click to see the full description and any exclusions and limitations that may apply.">*</sup></a></h4>, etc...'''

Amazing, so we get the item we were looking for, and if you check the rest of the output, you should see it all there too!

So we use the BeautifulSoup function .findAll - the structure we saved from above is really useful because you can see it come right up in the call: .findAll('tag', class_='class value copied'). The trailing underscore in class_ is there because class is a reserved word in Python. (The current bs4 documentation spells this method find_all; findAll is the older name for the same thing.) Something that I like is always using findAll over other options, because you always know you get a list back and you can immediately start using your Python knowledge of lists and variables from that one command.
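To see the “you always get a list” point in action, here is a sketch on a small made-up snippet (the HTML and variable names are mine, only the class name comes from the page above). It uses find_all, shows the dictionary form of the same search, and shows what happens when nothing matches.

```python
from bs4 import BeautifulSoup

# Made-up HTML for practice: two sale items and one unrelated heading.
sample_html = """
<div>
  <h4 class="w-sales-tile__product">Select Kite Hill Plant-Based Products</h4>
  <h4 class="w-sales-tile__product">Veggie Burgers, Bites and Sides</h4>
  <h4 class="something-else">Not a sale item</h4>
</div>
"""
soup = BeautifulSoup(sample_html, "html.parser")

# Keyword form: class_ has the underscore because class is reserved in Python.
by_keyword = soup.find_all("h4", class_="w-sales-tile__product")

# Dictionary form: the same search, handy for attributes with awkward names.
by_dict = soup.find_all("h4", attrs={"class": "w-sales-tile__product"})

# No match gives back an empty list rather than an error.
missing = soup.find_all("h4", class_="no-such-class")

print(len(by_keyword))     # how many sale items were found
print(by_keyword[0].name)  # each element is a tag you can inspect
print(len(by_dict))
print(len(missing))
```

Because an empty result is still a list, a loop over it simply does nothing, which is a gentle way to find out your class name is off.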

So wait, what else do we need to do? Well, we are almost there - we just need to get the text and ignore everything else!

Getting the text: sometimes this will be a lot harder, but this time it’s pretty straightforward - we just need to loop through the list above and extract the .text attribute from each element, like the following:

import requests

import bs4

from bs4 import BeautifulSoup

page_url = "https://www.wholefoodsmarket.com/sales-flyer?store-id=10005"

page_sourced = requests.get(page_url).content

html_content = BeautifulSoup(page_sourced, "html.parser")

sale_items = html_content.findAll('h4', class_="w-sales-tile__product")

sale_item_titles = [i.text for i in sale_items]

print(sale_item_titles)

'''Output: ['Select Kite Hill Plant-Based Products*', 'Veggie Burgers, Bites and Sides*', etc...'''

And so there you have it, you can scrape the Whole Foods sales page! Now this was a long one, and if you made it to the bottom I really appreciate it. If you found this helpful please share it, and if you haven’t already, subscribe for more. Most weeks I will do one long post like this, with shorter posts mid week covering more advanced tips for web scrapers with some experience (think tactics to avoid reCAPTCHA, getting structured data, etc.)
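One small extra for the road: here is a sketch that wraps the parsing steps from this post in a function you can test without the internet. The function name extract_sale_titles and the sample HTML are my own for illustration, and get_text(strip=True) is swapped in for .text so stray whitespace around the titles gets trimmed.

```python
from bs4 import BeautifulSoup


def extract_sale_titles(html):
    """Return the text of every sale-item heading found in the given HTML."""
    soup = BeautifulSoup(html, "html.parser")
    tags = soup.find_all("h4", class_="w-sales-tile__product")
    # strip=True trims whitespace from each piece of text before joining.
    return [tag.get_text(strip=True) for tag in tags]


# Made-up snippet shaped like one tile from the sales page.
sample_html = """
<h4 class="w-sales-tile__product">
  Select Kite Hill Plant-Based Products<a class="w-link" href="#legalese"><sup>*</sup></a>
</h4>
"""

titles = extract_sale_titles(sample_html)
print(titles)
```

To run it on the live page you would pass in the downloaded content from requests instead of sample_html.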

As I learn to do this, please do leave a comment so I can improve what I am sending out! I made a Substack so you have a place to come back and reread through the code if need be (if you were wondering)

proud of you!!